Efficiency gains of AI-assisted testing in 2024 and the near future

27.05.2024

34 VALA QA specialists (specifically in software testing, test automation, and test management) answered our survey on how much they benefit from using AI in their work and how much they think it can increase efficiency in the future.

52% of the respondents are using AI tools in their work. Those who use them estimate an average efficiency improvement of 29% in their work.

Test automation experts exhibited the highest usage and most positive outlook on AI tools, while software testers showed the least optimism. The overall sentiment indicates a belief in the efficiency gains brought by AI tools, though there is an expectation of a slowing development curve over time.

Naturally, it is very difficult to estimate one’s own efficiency improvement like this, and estimating it for the future is outright impossible. So bear in mind that these are merely estimates, best guesses, and opinions.

Let’s analyze further!

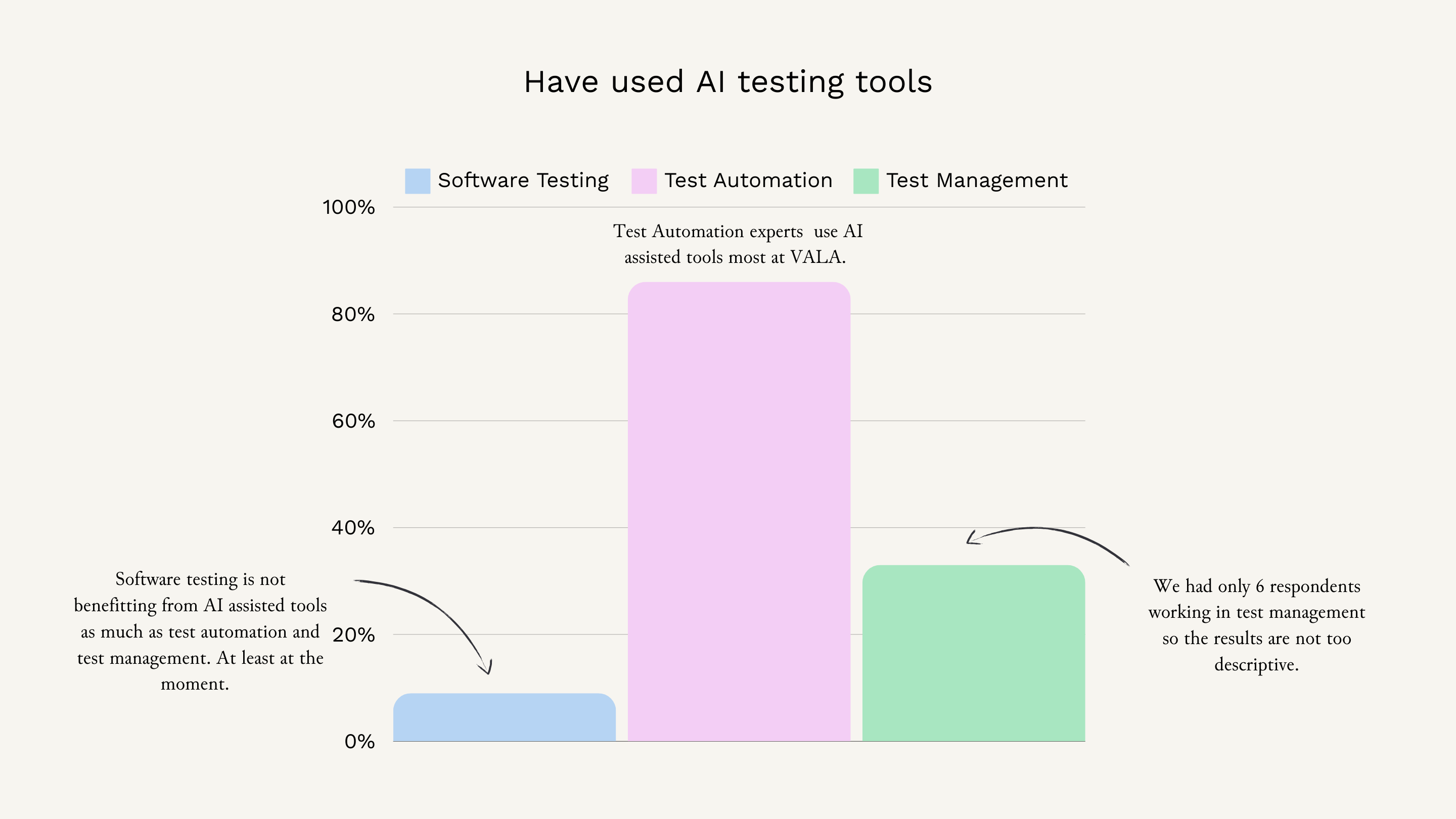

AI Tool Usage in Software Testing, Test Automation, and Test Management

While VALA’s test automation specialists are the most active users of AI tools, software testing specialists have not yet adopted the tools as widely, most likely because they haven’t seen such major gains with them. Test management data is less descriptive due to a smaller sample size, but based on this data, test management specialists fall somewhere in the middle in usage.

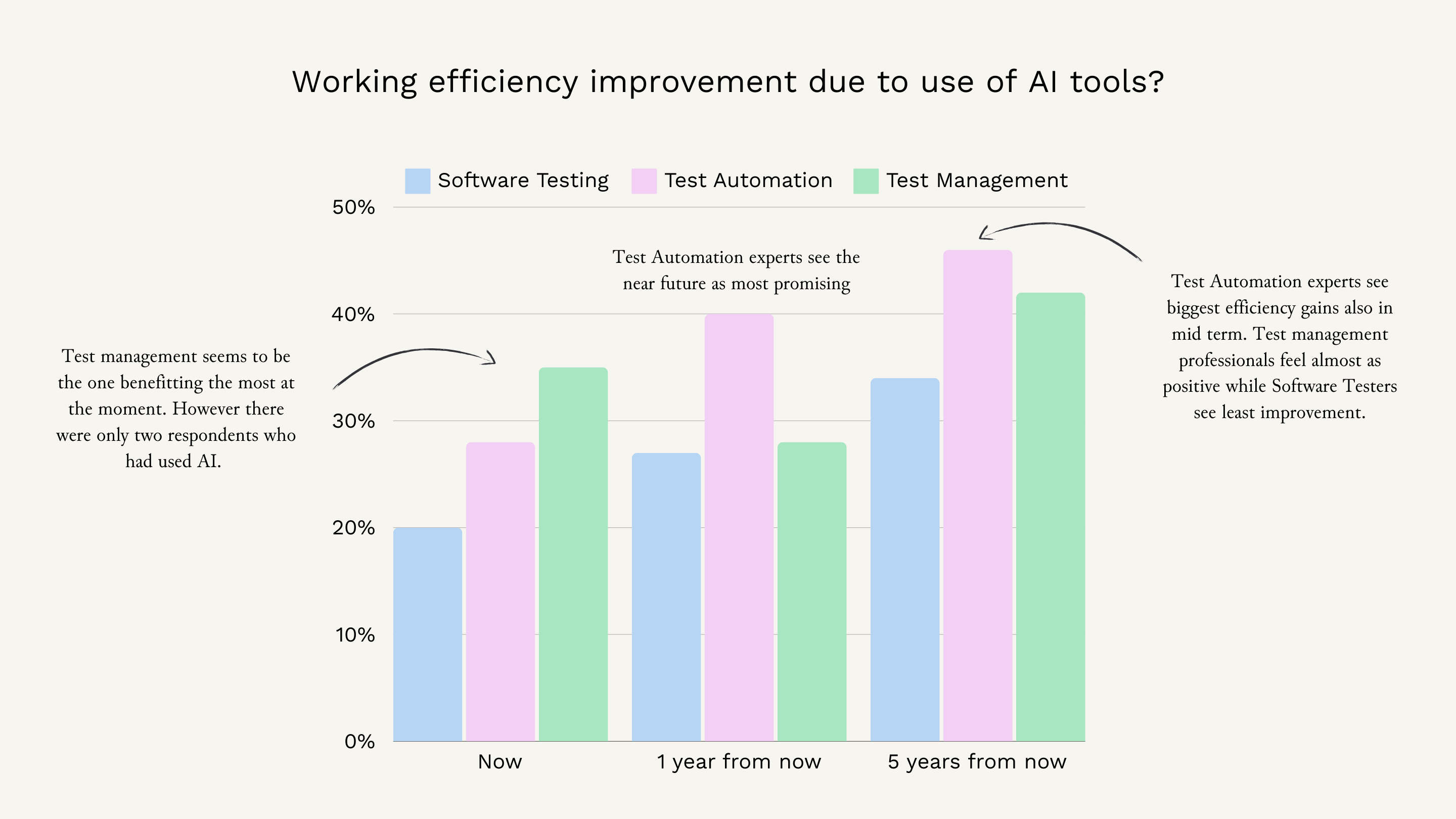

Efficiency Improvement Expectations Over Time

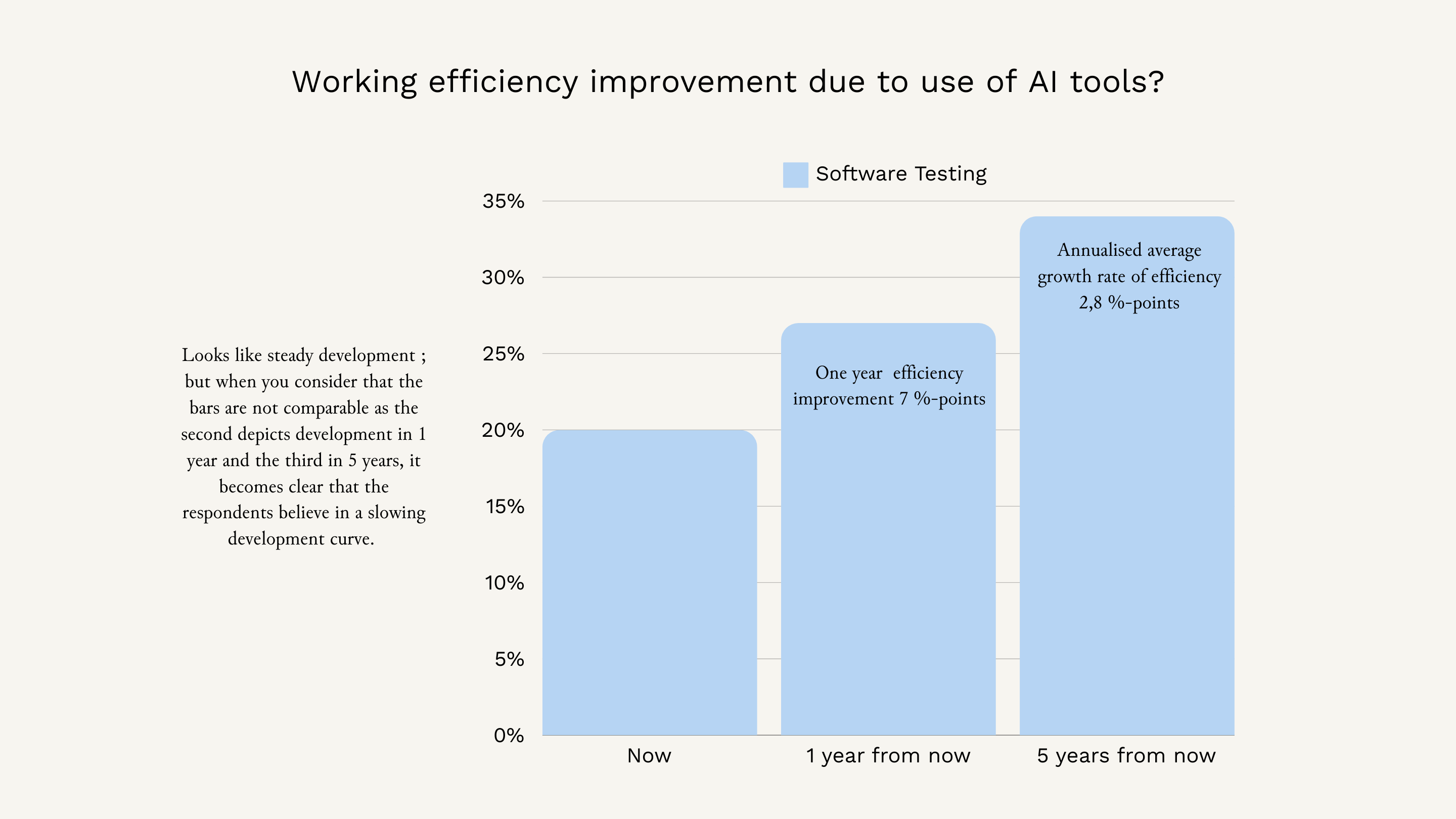

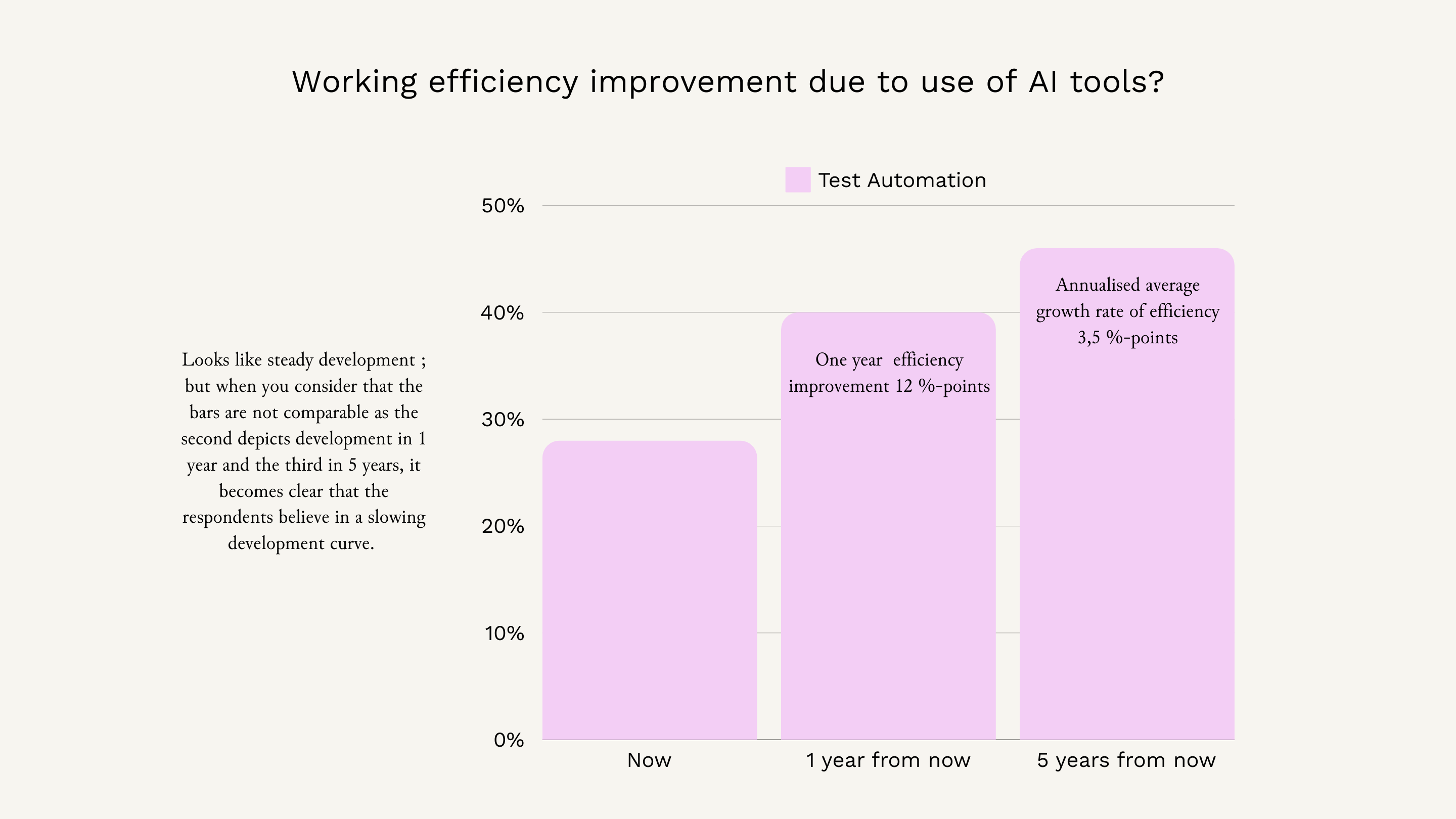

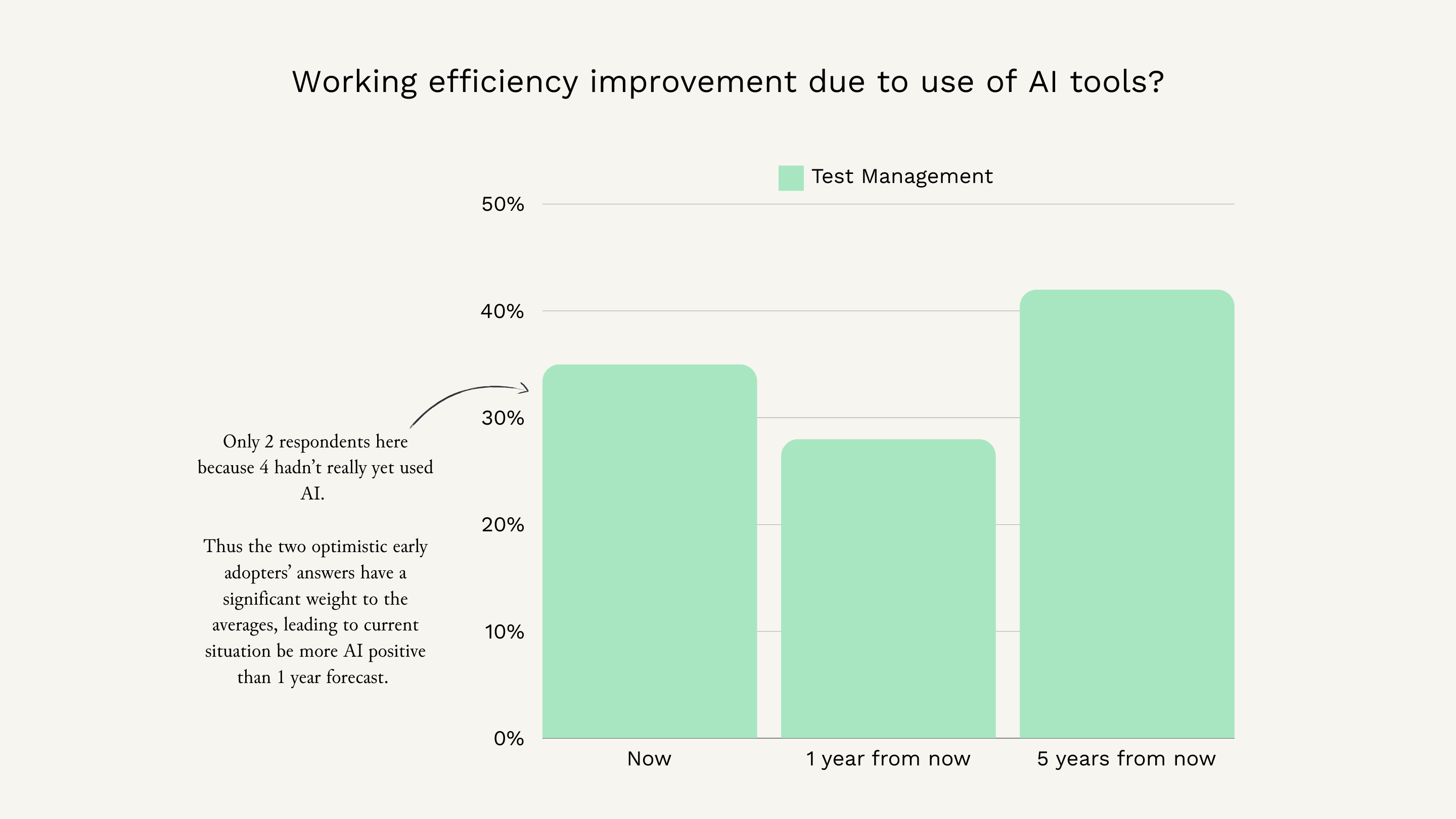

Here we compare the efficiency improvements of using the AI tools at the moment, one year from now, and five years into the future.

Test management professionals reported the highest efficiency gains. However, only two of the six TM respondents had used the tools so far, so this figure is not really comparable. The same goes for software testing, as only 1 of the 11 professionals who responded had used AI tools. Test automation is therefore the only expert group providing more relevant data. Among the test automation specialists who had used the tools, the average estimated efficiency improvement was 28%.
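To make these sample sizes concrete, here is a minimal Python sketch that computes the share of AI tool users in the two small groups from the counts quoted above. The variable and function names are ours, and the size of the third group is derived under the assumption that each of the 34 respondents belongs to exactly one of the three groups, which the survey text does not state explicitly.

```python
# Respondent counts quoted in the article; the dictionary keys are our labels.
TOTAL_RESPONDENTS = 34
respondents = {"test management": 6, "software testing": 11}
ai_users = {"test management": 2, "software testing": 1}


def usage_share(group: str) -> float:
    """Return the share of respondents in a group who have used AI tools."""
    return ai_users[group] / respondents[group]


# ASSUMPTION: every respondent belongs to exactly one of the three groups,
# so the remainder is attributed to test automation.
remaining = TOTAL_RESPONDENTS - sum(respondents.values())

for group in respondents:
    print(
        f"{group}: {ai_users[group]} of {respondents[group]} "
        f"({usage_share(group):.0%}) have used AI tools"
    )
print(f"remaining respondents (assumed test automation): {remaining}")
```

With only one or two users behind a group average, a single respondent's estimate determines most or all of that average, which is why the figures for these two groups should be read with caution.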

AI efficiency gains in Software Testing

Software Testers had diverse opinions on the efficiency of AI tools in their work, reflecting a mix of skepticism and cautious optimism.

Some respondents expressed frustration with the current state of AI tools, highlighting that while AI can generate results quickly, these results often lack accuracy. One remarked, “Credible sounding results real fast but with zero regard to truth value. Sounds like more work than before.”

Others found AI tools like Testim to be of limited use in their current roles, as one software tester noted, “I used Testim just for trying it out. I really can’t find a use for it in my current job.”

Despite these challenges, there is an acknowledgment of AI’s potential to assist with certain tasks and the possibility of future improvements. One respondent mentioned using AI for personal learning, saying, “At this moment I would use AI to explain to me some game and betting related things (that are related to my client project) I don’t yet understand.” Another highlighted the difficulty in measuring AI’s impact due to the time required to develop effective prompts, suggesting, “It takes time to form a good prompt and I do things with AI that I would not otherwise do, so probably my efficiency improvement is 0%.” Overall, while some software testers appreciate the convenience of having an AI assistant, they also recognize that significant advancements are needed for AI to become an indispensable tool in their work.

AI efficiency gains in Test Automation

The open comments from test automation engineers reveal a nuanced perspective on the efficiency gains from using AI tools in their work. Many respondents appreciate AI’s ability to automate routine tasks and enhance productivity, as this comment shows: “I mostly use AI to automate routine tasks and write some boring stuff. For example, to develop regular expressions or proof-read texts I write. Or add some “meat” to the text, for example make test cases out of use cases.”

Or this one: “Using AI for writing test cases and anything related to that is a no-brainer. It’s like a jump from coding books to googling.” Others highlighted AI’s utility in generating regular expressions, proof-reading texts, and summarizing data from multiple sources, which saves significant time and effort.

However, there are mixed feelings about the current maturity and practicality of AI tools, especially for more complex and dynamic tasks. Several respondents expressed a desire for more advanced AI capabilities. As one specialist noted, “Currently, I essentially have an AI advisor when what I need is an AI assistant. A tool that would understand my instructions and perform testing autonomously somewhat like a real person would: dynamically based on what they see. Products for this already sort of exist but they don’t seem to be very mature yet and their pricing is rather high.”

It is worth noting that you don’t always know whether you will get proper help or just end up wasting your time instead of saving it, as this comment suggests: “At the moment, AI chatbots can be useful for broader problems, but when trying to seek something specific, it might not be always able to help you and you’ve wasted time talking to it when you could’ve used the time in seeking the information from more reliable sources.”

The high cost and underdeveloped state of these tools are also concerns. Despite these limitations, there is optimism about future improvements. One comment pointed out, “In a few years, these problems might be a foregone conclusion, and then most of my time would be spent just supervising the AI’s code.”

Overall, AI tools are seen as quite beneficial, as this comment suggests: “AI is my best friend.” However, there is a clear need for continued advancements to fully realize their potential in test automation.

AI efficiency gains in Test Management

Several respondents praised AI tools like ChatGPT and Copilot as valuable aids in planning and brainstorming. One test manager highlighted, “ChatGPT, Copilot, etc., are very nice sparring partners when thinking about things to take into consideration. It was a great help in putting together a test plan for a project.” Another test manager had similar thoughts, noting that AI can provide a comprehensive framework for QA plans, which significantly speeds up the process of creating customer or product-specific plans.

However, the comments also pointed out that AI tools have their limitations and cannot fully replace human insight and detailed understanding. One respondent mentioned, “AI solutions today produce quite a comprehensive list of things, but they can not replace human thinking and knowledge of specifics.”

Additionally, some test managers observed that the current maturity level of testing tools in their organization might limit the potential benefits of AI. As one put it, “I guess the testing maturity and tooling in the organization is not at the moment on the level that AI assisting tools would bring much more benefits.”

These insights suggest that while AI tools are useful for certain aspects of test management, their full potential is yet to be realized, especially in environments that are still developing their testing infrastructure.

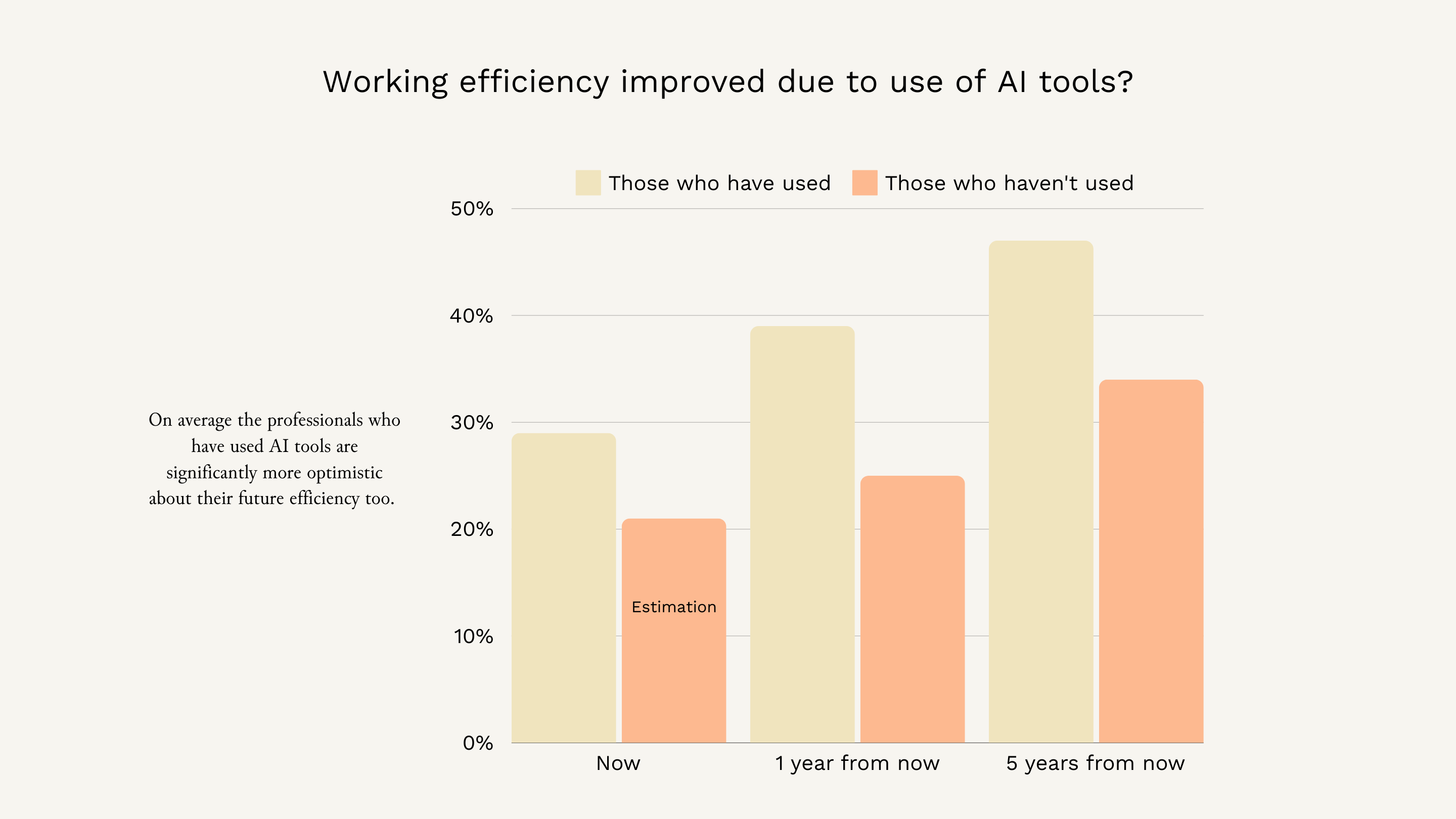

Comparison of those who have used AI-assisted testing tools and those who haven’t

52% of the respondents had used AI tools in their work. As for the current state, the comparison is naturally not valid, as the rest have no real-world experience. What is interesting, though, is that those who haven’t used the tools also believe the efficiency gains would have been smaller than the gains estimated by those who have used them.

Those who have used AI tools are also significantly more optimistic about future efficiency gains. This optimism gap suggests that firsthand experience with AI tools positively influences perceptions of their value. It implies that increasing exposure and hands-on experience with AI tools could shift overall sentiment more favorably.

It is also worth noting that those who haven’t used the tools are mostly software testers and test management specialists, who arguably can benefit less from AI tools in their work at the moment.

Conclusions

Among the respondents, 52% are already using these tools, and they estimate an average efficiency improvement of 29% in their work. Test automation experts show the highest usage and most positive outlook on AI tools, whereas software testers remain more skeptical. Despite the optimism about efficiency gains, there is a shared belief that the rate of improvement may slow over time. These estimations are inherently uncertain, reflecting best guesses and personal opinions.

Analysis of the different roles reveals that while test automation specialists are the most active users of AI tools, software testers have yet to adopt them widely, likely because they perceive smaller gains. Test management data, although limited, indicates moderate usage. The comments illustrate a range of experiences and expectations, from the ease of automating routine tasks and generating test cases to frustrations with the current maturity of AI tools.

Overall, with the exception of software testers, AI tools are currently seen as beneficial at VALA. Looking ahead, VALA specialists, software testers included, believe efficiency gains will increase further, approximately 40% from now.